One highly requested feature of AWS’s Application Load Balancer (ALB) is the ability to assign static IP addresses. Unfortunately, ALBs do not support this feature, and it is unlikely they will in the near future. Today, the only way to get static IP addresses for an application behind an ALB is to add another layer between the client and your ALB that does have a static IP address and forwards requests to your ALB. In this blog post, we will go over two solutions you can implement to get static IP addresses for your ALB.

Downsides to static IPs

Before we get into specifics about how to set up static IPs, let’s go over some limitations. For one, we want to continue using an Application Load Balancer in our network stack. There are many reasons to keep using an ALB. Perhaps you are using WAF and rely on that functionality to secure your app. You also may have many routes configured in the ALB, and there is a lot of functionality that would be difficult and costly to reproduce using other solutions.

The second limitation is that we do not want to manually set up and configure our own load balancer or proxy servers, and will instead stick with managed solutions in AWS. If you are comfortable configuring your own load balancer, then you should seriously consider replacing your ALB completely so you can get static IP addresses without any of the drawbacks of using AWS-only solutions.

Option 1: Use AWS Global Accelerator

One way to implement static IP addresses is to use AWS Global Accelerator. Global Accelerator supports static anycast IP addresses, meaning a fixed set of IP addresses can route traffic to your load balancers or network interfaces in multiple regions, and AWS will manage it all for you. The main drawback of Global Accelerator is price: you are charged per GB of data transferred over the network, with prices depending on both the source and destination of traffic.

If you are already using Global Accelerator to provide low-latency API access for your users, then cost may not be a concern. For any small to medium AWS setup that does not benefit from the other features of Global Accelerator, or for applications that ingest lots of data, Global Accelerator is likely too expensive. Another drawback of Global Accelerator is that you will lose the client IP address of your requests. The X-Forwarded-For header in requests to your application will contain the IP address of an edge node in the accelerator, not the actual client IP address.

Option 2: Use an NLB + Lambda function

The other method for setting up static IPs is to use a Network Load Balancer (NLB) in front of your ALB. This solution is presented in a blog post by AWS, and is the solution I decided to use for Blue Matador’s use case. The original blog post briefly describes the solution but leaves out some details about how the Lambda function works, so I will cover that below.

Additionally, we use Terraform instead of CloudFormation to manage our infrastructure configuration at Blue Matador, and there was not a clear way to run this solution using Terraform. I will include sample Terraform code throughout so you can see where my solution deviates from the blog post, and quickly run the solution yourself.

Finally, the IAM policy suggested in the blog post is far too permissive, and it is not clear whether or how the solution can be used when more than one listener on the ALB needs to receive traffic, so we will cover both issues as well.

How to set up a static IP in AWS with Terraform

In order to follow my Terraform configuration, you will need to set up your provider and some variables. Additionally, my config is written using Terraform v0.12. Older versions may work, but Terraform syntax was changed in 0.12 so changes may be required. In these examples we will assume you are running in the region us-east-1 and that you have availability zones set up correctly with private and public subnets created in us-east-1a, us-east-1b, and us-east-1d. You can simply switch out the names and values for the region and AZs for the ones actually used in your application.

Start off by setting up your provider:

provider "aws" {
  region  = "us-east-1"
  version = "2.7.0"
}

Next, you must make sure that you have an internal ALB to send traffic to. If you are currently using a publicly accessible ALB, you can simply create identical target groups, register targets to them, and then create a second ALB that is internal. Now you can fill in values for the following variables that will be used in the rest of the Terraform config:

variable "vpc_id" {
  type = string
}

variable "public_subnet_us_east_1a" {
  type = string
}

variable "public_subnet_us_east_1b" {
  type = string
}

variable "public_subnet_us_east_1d" {
  type = string
}

variable "alb_dns_name" {
  type = string
}

In this example we are using 3 subnets. If you are using more or fewer, adjust your Terraform configuration accordingly. These subnets should correspond to Availability Zones that match the zones your ALB runs in.

Next, we will allocate the Elastic IPs that will be our static IP addresses. You will need to allocate one EIP for each zone that you run in:

resource "aws_eip" "app_us_east_1a" {
  tags = {
    "Name" = "app_us_east_1a"
  }
}

resource "aws_eip" "app_us_east_1b" {
  tags = {
    "Name" = "app_us_east_1b"
  }
}

resource "aws_eip" "app_us_east_1d" {
  tags = {
    "Name" = "app_us_east_1d"
  }
}

Now we will create the Network Load Balancer. Each subnet_mapping block maps an EIP to the corresponding subnet for the NLB, which ensures that all connections through this NLB use our static IPs. Ensure that internal is false if you need the NLB to be publicly accessible.

resource "aws_lb" "app_public" {
  name               = "app-public"
  load_balancer_type = "network"
  internal           = false

  subnet_mapping {
    subnet_id     = var.public_subnet_us_east_1a
    allocation_id = aws_eip.app_us_east_1a.id
  }

  subnet_mapping {
    subnet_id     = var.public_subnet_us_east_1b
    allocation_id = aws_eip.app_us_east_1b.id
  }

  subnet_mapping {
    subnet_id     = var.public_subnet_us_east_1d
    allocation_id = aws_eip.app_us_east_1d.id
  }
}
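Once the NLB exists, you may want to confirm that its DNS name resolves only to the Elastic IPs you allocated. The Python sketch below is one way to do that and is not part of the original setup. The function names and the addresses in the demo are hypothetical, and the demo uses a stubbed resolver so that it runs without network access.

```python
import socket


def resolve_ipv4(hostname):
    """Return the set of IPv4 addresses that hostname currently resolves to."""
    _name, _aliases, addresses = socket.gethostbyname_ex(hostname)
    return set(addresses)


def unexpected_ips(hostname, expected_ips, resolve=resolve_ipv4):
    """Return resolved addresses that are not in the expected Elastic IP set."""
    return resolve(hostname) - set(expected_ips)


# Demo with a stubbed resolver (hypothetical addresses, no real DNS query).
def fake_resolver(hostname):
    return {"198.51.100.10", "203.0.113.7"}


extra = unexpected_ips("nlb.example.com", {"198.51.100.10"}, resolve=fake_resolver)
print(sorted(extra))  # ['203.0.113.7']
```

To check a real load balancer, call unexpected_ips with your NLB's DNS name and your own EIP addresses and leave the resolve argument at its default. An empty result means every resolved address is one of your static IPs.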

Now we will set up the target groups for our NLB. The AWS blog post does not cover the case where you need to forward traffic for multiple ports on the NLB, so our example handles both port 80 and port 443 to show how this works. We set the proxy_protocol_v2 option to false since it does not work with an ALB. You may be tempted to set it to true to preserve the client IP address, but you will only end up with 400 responses from the ALB, since it does not understand Proxy Protocol v2. Unfortunately, this solution has the same issue as the Global Accelerator solution: client IP addresses will not make it to your ALB. You can, however, look at VPC flow logs to correlate requests to client IP addresses.

We are also using the TCP protocol, even for port 443, so that TLS is not terminated at the NLB since we will let the ALB handle that. The target_type must be ip since we will be forwarding traffic to the underlying servers supporting the ALB, not to our own EC2 instances.

resource "aws_lb_target_group" "app_public_80" {
  name              = "app-public-80"
  port              = 80
  protocol          = "TCP"
  target_type       = "ip"
  vpc_id            = var.vpc_id
  proxy_protocol_v2 = false
}

resource "aws_lb_target_group" "app_public_443" {
  name              = "app-public-443"
  port              = 443
  protocol          = "TCP"
  target_type       = "ip"
  vpc_id            = var.vpc_id
  proxy_protocol_v2 = false
}

Now, we set up our NLB listeners to send traffic to our target groups. Set the default_action to simply forward all requests to the appropriate target group:

resource "aws_lb_listener" "app_public_80" {
  load_balancer_arn = aws_lb.app_public.arn
  port              = "80"
  protocol          = "TCP"

  default_action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.app_public_80.arn
  }
}

resource "aws_lb_listener" "app_public_443" {
  load_balancer_arn = aws_lb.app_public.arn
  port              = "443"
  protocol          = "TCP"

  default_action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.app_public_443.arn
  }
}

Now we have an NLB set up with listeners and target groups on the appropriate ports. The problem now is that there are not any targets in the target groups, so the traffic will not go anywhere. This is where things get complicated.

Since the servers that make up your ALB will change over time as your application scales, we need to dynamically add the IP addresses of the AWS-managed servers as targets for your NLB target groups. In practice, this means periodically querying DNS and then working out which target IPs to add or remove based on the DNS response for your ALB. The AWS blog post provides a Lambda function that automatically handles this process for us, so that is what we will use.

This Lambda function works by querying your ALB’s DNS name to get the IP addresses of the AWS-managed servers that make up your ALB. Then, a target is registered on your target group for each IP address. These results are also stored in S3 so they can be compared on the following runs, and targets can be added and removed as the servers in your ALB autoscale. We use CloudWatch Events to run the function every minute so that the configuration is never stale, and your NLB should always have an up-to-date target list.
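The comparison between runs can be pictured as a set difference. The Python sketch below is an illustration of that idea only, not the code from the AWS function. The function name and the addresses are hypothetical.

```python
def plan_target_changes(resolved_ips, registered_ips):
    """Compare the latest DNS answer with what is currently registered.

    Returns (to_register, missing): addresses that are new in DNS, and
    addresses that are registered but no longer appear in DNS.
    """
    resolved = set(resolved_ips)
    registered = set(registered_ips)
    to_register = sorted(resolved - registered)
    missing = sorted(registered - resolved)
    return to_register, missing


# Illustrative addresses only.
previous_run = ["10.0.1.15", "10.0.2.27"]  # e.g. the list saved in S3
current_dns = ["10.0.2.27", "10.0.3.41"]   # e.g. the latest DNS answer

to_register, missing = plan_target_changes(current_dns, previous_run)
print(to_register)  # ['10.0.3.41']
print(missing)      # ['10.0.1.15']
```

New addresses can be registered right away, while missing ones are only candidates for removal, which is why the previous results need to be kept somewhere between invocations.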

To run this Lambda function, we first need to create an S3 bucket to keep track of the target IP addresses. The function is actually fairly feature-rich: it registers new targets quickly, deregisters old targets slowly, and uses very few resources to run. Simply create a new private bucket with a unique name:

resource "aws_s3_bucket" "static_lb" {
  bucket = "static-lb"
  acl    = "private"
  region = "us-east-1"

  versioning {
    enabled = true
  }
}

Now we will create an IAM role for the function to run as. I have made the permissions more restrictive than the example in the blog post, namely by restricting TargetGroup actions to the target groups we actually need, and by restricting S3 access to only the permissions needed by the Lambda function:

resource "aws_iam_role_policy" "static_lb_lambda" {
  name = "static-lb-lambda"
  role = aws_iam_role.static_lb_lambda.id

  policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "logs:CreateLogGroup",
        "logs:CreateLogStream",
        "logs:PutLogEvents"
      ],
      "Resource": [
        "arn:aws:logs:*:*:*"
      ],
      "Effect": "Allow",
      "Sid": "LambdaLogging"
    },
    {
      "Action": [
        "s3:GetObject",
        "s3:PutObject"
      ],
      "Resource": [
        "${aws_s3_bucket.static_lb.arn}/*"
      ],
      "Effect": "Allow",
      "Sid": "S3"
    },
    {
      "Action": [
        "elasticloadbalancing:RegisterTargets",
        "elasticloadbalancing:DeregisterTargets"
      ],
      "Resource": [
        "${aws_lb_target_group.app_public_80.arn}",
        "${aws_lb_target_group.app_public_443.arn}"
      ],
      "Effect": "Allow",
      "Sid": "ChangeTargetGroups"
    },
    {
      "Action": [
        "elasticloadbalancing:DescribeTargetHealth"
      ],
      "Resource": "*",
      "Effect": "Allow",
      "Sid": "DescribeTargetGroups"
    },
    {
      "Action": [
        "cloudwatch:putMetricData"
      ],
      "Resource": "*",
      "Effect": "Allow",
      "Sid": "CloudWatch"
    }
  ]
}
EOF
}

resource "aws_iam_role" "static_lb_lambda" {
  name        = "static-lb-lambda"
  description = "Managed by Terraform"

  assume_role_policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": "sts:AssumeRole",
      "Principal": {
        "Service": "lambda.amazonaws.com"
      },
      "Effect": "Allow",
      "Sid": ""
    }
  ]
}
EOF
}

Now you need to download the Lambda function. You can get the zip file here. I searched for this code on GitHub but was unable to find anything, so you must manage any changes you want to make yourself. The code used in the AWS blog post has one potential issue that I decided to fix, but you may skip it if you want.

The problem arises if you have multiple target groups sending traffic to a single ALB. The Lambda function stores the target IP list and deregistration list in S3 by ALB DNS name only, meaning you will have conflicts if you try to run multiple functions to manage multiple target groups to cover more than one port, like in my example. I was able to fix this by changing the code in populate_NLB_TG_with_ALB.py:

Replace these lines:

ACTIVE_IP_LIST_KEY = "{}-active-registered-IPs/{}"\
    .format(ALB_DNS_NAME, ACTIVE_FILENAME)
PENDING_IP_LIST_KEY = "{}-pending-deregisteration-IPs/{}"\
    .format(ALB_DNS_NAME, PENDING_DEREGISTRATION_FILENAME)

With these lines:

ACTIVE_IP_LIST_KEY = "{}/{}/active.json".format(NLB_TG_ARN, ALB_DNS_NAME)
PENDING_IP_LIST_KEY = "{}/{}/pending.json".format(NLB_TG_ARN, ALB_DNS_NAME)
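To see why keying on the target group ARN avoids the conflict, here is a small standalone Python sketch that builds keys in the same format as the replacement lines. The helper name, the ARNs, and the DNS name are made up for illustration.

```python
def state_keys(nlb_tg_arn, alb_dns_name):
    """Build the S3 object keys that hold state for one target group."""
    prefix = "{}/{}".format(nlb_tg_arn, alb_dns_name)
    return prefix + "/active.json", prefix + "/pending.json"


# Hypothetical ARNs and DNS name, for illustration only.
ALB = "internal-app-1234567890.us-east-1.elb.amazonaws.com"
TG_80 = ("arn:aws:elasticloadbalancing:us-east-1:111122223333:"
         "targetgroup/app-public-80/0123456789abcdef")
TG_443 = ("arn:aws:elasticloadbalancing:us-east-1:111122223333:"
          "targetgroup/app-public-443/fedcba9876543210")

keys_80 = state_keys(TG_80, ALB)
keys_443 = state_keys(TG_443, ALB)

# The same ALB with two different target groups yields disjoint keys.
print(set(keys_80).isdisjoint(keys_443))  # True
```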

Now you will have unique S3 objects per target group and ALB combination, and do not need to worry about running multiple functions to handle multiple listening ports on the ALB. Once that is done, you can re-zip the code. This command assumes you unzipped the original contents into a directory called lambda_function:

cd lambda_function/
zip -r9 ../lambda_function.zip .

Now you will have a lambda_function.zip file. Since Lambda is sensitive to file structure, make sure your lambda_function.zip has an internal structure like this:

lambda_function.zip
  dns/
  dnspython-1.15.0.dist-info/
  populate_NLB_TG_with_ALB.py
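If you want to check the archive layout before uploading, a short Python script using the standard zipfile module can confirm that the handler file sits at the top level. This is a sketch rather than part of the original solution, and the helper name is hypothetical. The demo builds two small archives in memory so it can run anywhere.

```python
import io
import zipfile

HANDLER_FILE = "populate_NLB_TG_with_ALB.py"


def handler_at_root(zip_source, handler_file=HANDLER_FILE):
    """Return True if handler_file sits at the top level of the archive."""
    with zipfile.ZipFile(zip_source) as archive:
        return handler_file in archive.namelist()


# Archive with the expected layout: handler at the root.
good = io.BytesIO()
with zipfile.ZipFile(good, "w") as archive:
    archive.writestr(HANDLER_FILE, "# handler code\n")
    archive.writestr("dns/__init__.py", "")

# Archive created by zipping the parent directory: one level too deep.
bad = io.BytesIO()
with zipfile.ZipFile(bad, "w") as archive:
    archive.writestr("lambda_function/" + HANDLER_FILE, "# handler code\n")

print(handler_at_root(good))  # True
print(handler_at_root(bad))   # False
```

For a real check, pass the path to your own archive, for example handler_at_root("lambda_function.zip").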

Now that we have a zip file with our Lambda code prepared, we can create our Lambda functions. Since we are managing two target groups, we will run two Lambda functions with slightly different configurations. This means DNS will get queried for the same ALB twice, which is inefficient, but the cost is minimal. If you’d like to improve the efficiency further, you can modify the Python code to handle multiple target groups.

resource "aws_lambda_function" "static_lb_updater_80" {
  filename         = "lambda_function.zip"
  function_name    = "static_lb_updater_80"
  role             = aws_iam_role.static_lb_lambda.arn
  handler          = "populate_NLB_TG_with_ALB.lambda_handler"
  source_code_hash = filebase64sha256("lambda_function.zip")
  runtime          = "python2.7"
  memory_size      = 128
  timeout          = 300

  environment {
    variables = {
      ALB_DNS_NAME                      = var.alb_dns_name
      ALB_LISTENER                      = "80"
      S3_BUCKET                         = aws_s3_bucket.static_lb.id
      NLB_TG_ARN                        = aws_lb_target_group.app_public_80.arn
      MAX_LOOKUP_PER_INVOCATION         = 50
      INVOCATIONS_BEFORE_DEREGISTRATION = 10
      CW_METRIC_FLAG_IP_COUNT           = true
    }
  }
}

resource "aws_lambda_function" "static_lb_updater_443" {
  filename         = "lambda_function.zip"
  function_name    = "static_lb_updater_443"
  role             = aws_iam_role.static_lb_lambda.arn
  handler          = "populate_NLB_TG_with_ALB.lambda_handler"
  source_code_hash = filebase64sha256("lambda_function.zip")
  runtime          = "python2.7"
  memory_size      = 128
  timeout          = 300

  environment {
    variables = {
      ALB_DNS_NAME                      = var.alb_dns_name
      ALB_LISTENER                      = "443"
      S3_BUCKET                         = aws_s3_bucket.static_lb.id
      NLB_TG_ARN                        = aws_lb_target_group.app_public_443.arn
      MAX_LOOKUP_PER_INVOCATION         = 50
      INVOCATIONS_BEFORE_DEREGISTRATION = 10
      CW_METRIC_FLAG_IP_COUNT           = true
    }
  }
}

Notice that the only configuration differences are the ALB_LISTENER variable, which is the port on the ALB we need to send traffic to, and the NLB_TG_ARN, which is specific to the target group we need to register IPs on. S3_BUCKET is the bucket we created earlier, where IP lists are stored so they can be compared between runs.

MAX_LOOKUP_PER_INVOCATION is needed because a single DNS lookup for your ALB will return only up to 8 IP addresses. If the lookup returns exactly 8 IP addresses, then it is performed MAX_LOOKUP_PER_INVOCATION times in an effort to get every IP address. According to the AWS blog, it should take less than 40 lookups to get the full set of IP addresses for your ALB.

INVOCATIONS_BEFORE_DEREGISTRATION controls the deregistration process. This is the number of times that an IP address must have been missing before the Lambda function deregisters it from your target group. I set this value to 10 so that targets are only removed after 10 minutes. ALB servers will be removed from DNS results well before they are actually terminated, so this should not be an issue.

The CW_METRIC_FLAG_IP_COUNT variable just tells the Lambda function to keep track of the current number of IPs each ALB has in CloudWatch, and is completely optional.
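The following Python sketch illustrates how the two tuning variables might behave. It is my reading of the behavior described above, not the code shipped in the zip file. The function names are hypothetical, the lookup is passed in as a plain callable so the example runs without DNS, and counting the first lookup toward the maximum is an assumption.

```python
def collect_alb_ips(lookup, max_lookups, full_answer_size=8):
    """Gather ALB addresses, repeating the lookup when the answer is full.

    lookup is any zero-argument callable returning a list of IP strings.
    A full answer suggests there are more nodes than fit in one response,
    so the lookup is repeated until max_lookups lookups have been made.
    """
    seen = set(lookup())
    if len(seen) == full_answer_size:
        for _ in range(max_lookups - 1):
            seen.update(lookup())
    return seen


def update_missing_counts(missing_counts, resolved_ips, registered_ips, threshold):
    """Track consecutive misses and return (new_counts, ips_to_deregister)."""
    new_counts = {}
    to_deregister = []
    for ip in registered_ips:
        if ip in resolved_ips:
            continue  # Seen in DNS again, so its miss count resets.
        count = missing_counts.get(ip, 0) + 1
        if count >= threshold:
            to_deregister.append(ip)
        else:
            new_counts[ip] = count
    return new_counts, sorted(to_deregister)


# Two canned DNS answers of 8 addresses each, overlapping by 4.
canned = iter([
    ["10.0.0.%d" % i for i in range(8)],
    ["10.0.0.%d" % i for i in range(4, 12)],
])
print(len(collect_alb_ips(lambda: next(canned), max_lookups=2)))  # 12

# An address missing for three runs in a row is deregistered on the third.
counts = {}
for _ in range(3):
    counts, gone = update_missing_counts(counts, set(), ["10.0.1.15"], threshold=3)
print(gone)  # ['10.0.1.15']
```

With the values used in this post, the threshold would be 10 and the function runs once per minute, which corresponds to the roughly 10 minute delay before a target is removed.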

Now that we have our Lambda functions created, there is one last step: triggering the functions. This is accomplished by using CloudWatch Events to trigger the Lambda functions every minute. First we create a CloudWatch Event Rule that triggers every minute, and set our Lambda functions as targets:

resource "aws_cloudwatch_event_rule" "cron_minute" {
  name                = "cron-minute"
  schedule_expression = "rate(1 minute)"
  is_enabled          = true
}

resource "aws_cloudwatch_event_target" "static_lb_updater_80" {
  rule      = aws_cloudwatch_event_rule.cron_minute.name
  target_id = "TriggerStaticPort80"
  arn       = aws_lambda_function.static_lb_updater_80.arn
}

resource "aws_cloudwatch_event_target" "static_lb_updater_443" {
  rule      = aws_cloudwatch_event_rule.cron_minute.name
  target_id = "TriggerStaticPort443"
  arn       = aws_lambda_function.static_lb_updater_443.arn
}

Next, we must add a permission to each Lambda function to allow it to be triggered by CloudWatch:

resource "aws_lambda_permission" "allow_cloudwatch_80" {
  statement_id  = "AllowExecutionFromCloudWatch"
  action        = "lambda:InvokeFunction"
  function_name = aws_lambda_function.static_lb_updater_80.function_name
  principal     = "events.amazonaws.com"
  source_arn    = aws_cloudwatch_event_rule.cron_minute.arn
}

resource "aws_lambda_permission" "allow_cloudwatch_443" {
  statement_id  = "AllowExecutionFromCloudWatch"
  action        = "lambda:InvokeFunction"
  function_name = aws_lambda_function.static_lb_updater_443.function_name
  principal     = "events.amazonaws.com"
  source_arn    = aws_cloudwatch_event_rule.cron_minute.arn
}

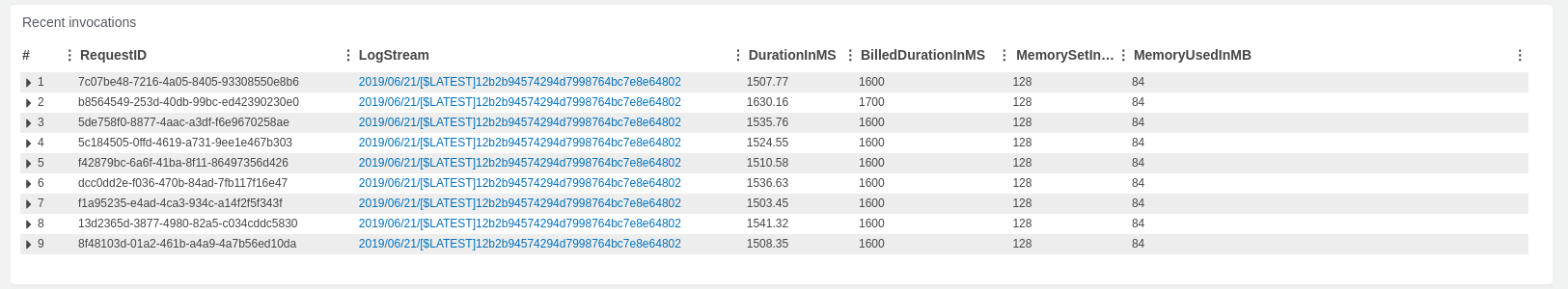

Now that everything is set up, you should be able to see your Lambda function invocations every minute:

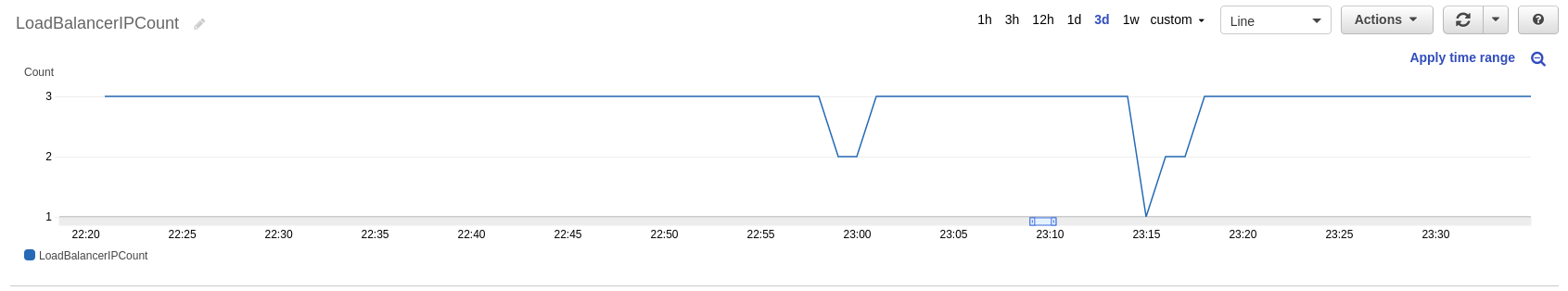

You can also check out the CloudWatch metric if you enabled it. It is located in the AWS/ApplicationELB namespace, under LoadBalancerName > LoadBalancerIPCount:

Conclusion

Normally I would say that you’ve now learned everything you need to know to set up static IPs for your AWS Application Load Balancer. The truth is, the solution using an NLB and Lambda function to update targets is very complex and there are dozens of opportunities to configure things incorrectly. If you are having issues with the configuration, just retrace your steps and double-check everything. It’s also important to really understand what is going on when we add an NLB in front of an ALB, and why each step of the setup is required.

If complex systems like this are something you deal with often, you probably need a way to monitor them. Blue Matador automatically monitors your AWS Lambda functions, ALBs, NLBs, and target groups so you don’t have to. Use Blue Matador to get hundreds of alerts automatically set up to monitor all of your resources.