Kubernetes on AWS: EKS vs Kops


There are three popular methods for running Kubernetes on AWS: manually setting up everything on EC2 instances, using Kops to manage your cluster, or using Amazon EKS to manage your cluster. Managing a Kubernetes cluster on AWS without any tooling is a complicated process that is not recommended for most administrators, so we will focus on EKS and Kops. In this blog post, we compare cluster setup, management, and security features for both Kops and EKS to determine which solution you should use.


What Is AWS EKS?

If you thought EKS was an acronym for “Elastic Kubernetes Service”, you’re not alone but you are incorrect. The full name is actually Amazon Elastic Container Service for Kubernetes, which you can promptly forget since only Amazon uses it.

Amazon EKS was made generally available in June 2018 and is described as a “Highly available, scalable, and secure Kubernetes service”. This kind of description in the Kubernetes space might lead you to believe you can have a 1-click Kubernetes cluster setup, and you would again be mistaken. Instead, EKS fully manages just the Kubernetes control plane (master nodes, etcd, API server), for a flat usage fee of $0.20 per hour or ~$145 per month. The tradeoff is that you do not have access to the master nodes at all, and are unable to make any modifications to the control plane.
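As a quick check on that monthly figure, the arithmetic can be sketched in shell. The hourly rate comes from the text; the hours-per-month values are my assumption (730 for an average month, 720 for a 30-day month), which is why the result is only approximate.

```shell
# Rough monthly cost of the EKS control plane, in whole dollars.
# Works in integer cents to avoid floating point.
#   $1 = hourly rate in cents, $2 = hours in the month (assumed value)
monthly_cost_dollars() {
  echo $(( $1 * $2 / 100 ))
}

monthly_cost_dollars 20 730   # average month: prints 146
monthly_cost_dollars 20 720   # 30-day month: prints 144
```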

What Is Kops?

Kubernetes Operations (kops) is a CLI tool for “Production Grade K8s Installation, Upgrades, and Management”. Kops has been around since late 2016, well before EKS existed.

Kops significantly simplifies Kubernetes cluster setup and management compared to manually setting up master and worker nodes. It manages Route53, AutoScaling Groups, ELBs for the API server, security groups, master bootstrapping, node bootstrapping, and rolling updates to your cluster. Since kops is an open source tool, it is completely free to use, but you are responsible for paying for and maintaining the underlying infrastructure created by kops to manage your Kubernetes cluster.


Kubernetes Cluster Setup

The first point to consider when evaluating Kubernetes solutions on AWS is how difficult it is to set up a working Kubernetes cluster.

Setting Up a Kubernetes Cluster on EKS

Setting up a cluster with EKS is fairly complicated and has some prerequisites. You must set up and use both the AWS CLI and aws-iam-authenticator to authenticate to your cluster, so there is some overhead with setting up IAM permissions and users. Since EKS does not actually create worker nodes automatically with your EKS cluster, you must also manage that process.
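Once the IAM pieces are in place, pointing kubectl at the cluster might look like the sketch below. It assumes an AWS CLI version that includes the `aws eks update-kubeconfig` subcommand and credentials for an IAM identity that is allowed to access the cluster. The helper name, cluster name, and region are placeholders, not anything prescribed by AWS.

```shell
# Sketch: connect kubectl to an existing EKS cluster.
# connect_to_eks is a hypothetical helper; $1 = cluster name, $2 = region.
connect_to_eks() {
  # Write (or update) a kubeconfig entry for the cluster, then list nodes
  # to confirm that authentication works end to end.
  aws eks update-kubeconfig --name "$1" --region "$2" &&
    kubectl get nodes
}

# Example (placeholder values):
#   connect_to_eks k8s us-east-1
```

If no worker nodes have been created yet, the node list will simply be empty, since EKS only provides the control plane.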

Amazon provides instructions and CloudFormation templates that can accomplish this, but some of them may have to be modified to work with your specific requirements such as encrypted root volumes or running nodes in private subnets. We recently wrote a blog post about setting up EKS using the AWS documentation, and ran into several issues. I highly recommend reading that post if you are going the CloudFormation route to avoid some major pitfalls -- specifically, do not use the root user on your AWS account to create your EKS cluster in the AWS web console.

If you prefer to use Terraform over CloudFormation for infrastructure management, you can use the eks module to set up your cluster. In order to use this module you must already have a VPC and subnets set up for EKS, which can also be done using Terraform. This module includes many options for creating worker nodes, including setting up a Spot Fleet for your workers, and can also optionally update your kubeconfig files to work with aws-iam-authenticator. Some of the documentation on the module is incomplete, and I had to refer to the variables.tf and local.tf files in the GitHub repo for the project in order to fully configure the workers to my specification. Once the Terraform configuration was correct though, I was impressed that Terraform generated a kubeconfig that worked with aws-iam-authenticator without me having to copy and paste values from the AWS console. Below is an example Terraform config that creates an EKS cluster with several options set.

    provider "aws" {
      region  = "us-east-1"
      version = "2.3.0"
    }

    module "eks_k8s1" {
      source  = "terraform-aws-modules/eks/aws"
      version = "2.3.1"

      cluster_version = "1.12"
      cluster_name    = "k8s"

      # Existing VPC and subnets (placeholder IDs)
      vpc_id  = "vpc-00000000"
      subnets = ["subnet-00000001", "subnet-00000002", "subnet-00000003"]

      cluster_endpoint_private_access = "true"
      cluster_endpoint_public_access  = "true"

      # Generate a kubeconfig that works with aws-iam-authenticator
      write_kubeconfig      = true
      config_output_path    = "/.kube/"
      manage_aws_auth       = true
      write_aws_auth_config = true

      # IAM users mapped to Kubernetes users and groups
      map_users = [
        {
          user_arn = "arn:aws:iam::12345678901:user/user1"
          username = "user1"
          group    = "system:masters"
        },
      ]

      # Worker nodes are not created by EKS itself, so define them here
      worker_groups = [
        {
          name                 = "workers"
          instance_type        = "t2.large"
          asg_min_size         = 3
          asg_desired_capacity = 3
          asg_max_size         = 3
          root_volume_size     = 100
          root_volume_type     = "gp2"
          ami_id               = "ami-0000000000"
          ebs_optimized        = false
          key_name             = "all"
          enable_monitoring    = false
        },
      ]

      tags = {
        Cluster = "k8s"
      }
    }
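Applying a config like this follows the usual Terraform workflow. The sketch below assumes the config lives in its own directory and that AWS credentials are already available to Terraform; the helper name and the plan file name are placeholders.

```shell
# Sketch: initialize, plan, and apply a Terraform config directory.
# apply_eks_config is a hypothetical helper; $1 = directory holding the config.
# The body runs in a subshell so the caller's working directory is unchanged.
apply_eks_config() (
  cd "$1" &&
    terraform init &&
    terraform plan -out=eks.tfplan &&
    terraform apply eks.tfplan
)

# Example (placeholder path):
#   apply_eks_config ./eks-cluster
```

Saving the plan to a file and applying that file means the changes you reviewed are the changes that get made.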

The method for setting up EKS that works best for you is probably the one closest to what you already use. If you are trying to set up EKS in an existing VPC, use whatever tool you are already using to manage your VPC. When using EKS in a new VPC, either CloudFormation or Terraform is a great choice for managing all of the related resources.

Setting Up a Kubernetes Cluster on Kops

Kops is a CLI tool and must be installed on your local machine alongside kubectl. Getting a cluster running is as simple as running the kops create cluster command with all of the necessary options. Kops will manage most of the AWS resources required to run a Kubernetes cluster, and will work with either a new or existing VPC. Unlike EKS, kops will create your master nodes as EC2 instances as well, and you are able to access those nodes directly and make modifications. With access to the master nodes, you can choose which networking layer to use, choose the size of master instances, and directly monitor the master nodes. You also have the option of setting up a cluster with only a single master, which might be desirable for dev and test environments where high availability is not a requirement. Kops also supports generating Terraform config for your resources instead of directly creating them, which is a nice feature if you use Terraform.

Below is an example command for creating a cluster with 3 masters and 3 workers in a new VPC. Instructions for getting an appropriate AMI for your nodes can be found here.

    kops create cluster \
      --cloud aws \
      --dns public \
      --dns-zone ${ROUTE53_ZONE} \
      --topology private \
      --networking weave \
      --associate-public-ip=false \
      --encrypt-etcd-storage \
      --network-cidr 10.2.0.0/16 \
      --image ${AMI_ID} \
      --kubernetes-version 1.10.11 \
      --master-size t2.medium \
      --master-count 3 \
      --master-zones us-east-1a,us-east-1b,us-east-1d \
      --master-volume-size 64 \
      --zones us-east-1a,us-east-1b,us-east-1d \
      --node-size t2.large \
      --node-count 3 \
      --node-volume-size 128 \
      --ssh-access 10.0.0.0/16 \
      ${CLUSTER_NAME}
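Without the `--yes` flag, `kops create cluster` only writes the cluster spec to the kops state store; the AWS resources are created in a later step. A minimal sketch of that follow-up, assuming `KOPS_STATE_STORE` points at an S3 bucket you own (the bucket name below is a placeholder) and `CLUSTER_NAME` is set as in the command above:

```shell
# Sketch: build the cluster described by a stored kops spec.
# build_kops_cluster is a hypothetical helper; $1 = cluster name.
build_kops_cluster() {
  # Create the AWS resources, then check that the masters and nodes
  # have joined the cluster and report healthy.
  kops update cluster "$1" --yes &&
    kops validate cluster --name "$1"
}

# Example (placeholder values):
#   export KOPS_STATE_STORE=s3://example-kops-state
#   build_kops_cluster "${CLUSTER_NAME}"
```

Validation typically fails for the first several minutes while instances boot, so expect to rerun it until it passes.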

Winner: Kops

I found kops to be the quickest way to get a fully functioning cluster running in a brand new VPC. It is a tool specifically created by the Kubernetes on AWS community, and works very well at doing that one thing. EKS on the other hand is still a relatively new service for AWS, and there is a lot of extra hassle to get things running with IAM, managing worker nodes, and configuring your VPC. Tools like CloudFormation and Terraform make setting up EKS easier, but it is clearly not a completely solved problem at this time.

Kubernetes Cluster Management

Cluster setup is a rare event. When evaluating a solution that will affect a critical piece of your infrastructure like Kubernetes, you must also consider what it is like to scale nodes, perform cluster upgrades, and integrate with other services down the road.

Managing a Kubernetes Cluster on EKS

The extra effort required to set up EKS using either CloudFormation or Terraform pays off when it comes to cluster maintenance. EKS has a prescribed way to upgrade the Kubernetes version of the control plane with minimal disruption. Your worker nodes can then be updated by using a newer AMI for the new version of Kubernetes, creating a new worker group, then migrating your workload to the new nodes. The process of moving pods to new nodes is described in another of our blog posts here. Scaling up your cluster with EKS is as simple as adding more worker nodes. Since the control plane is fully managed, you do not have to worry about adding or upgrading master sizes when the cluster gets larger.
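For illustration, a control plane upgrade can be requested from the AWS CLI along these lines. This is a sketch rather than the full documented procedure: the helper names are hypothetical, and the cluster name and target version are placeholders.

```shell
# Sketch: inspect and upgrade the Kubernetes version of an EKS control plane.

# Print the version the control plane is currently running. $1 = cluster name.
eks_cluster_version() {
  aws eks describe-cluster --name "$1" --query 'cluster.version' --output text
}

# Request an upgrade. $1 = cluster name, $2 = target Kubernetes version.
# The call returns immediately with an update ID while AWS performs the upgrade.
upgrade_eks_control_plane() {
  aws eks update-cluster-version --name "$1" --kubernetes-version "$2"
}

# Example (placeholder values):
#   eks_cluster_version k8s
#   upgrade_eks_control_plane k8s 1.12
```

Worker nodes are not touched by this call, which is why the AMI swap and workload migration described above are still needed afterwards.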

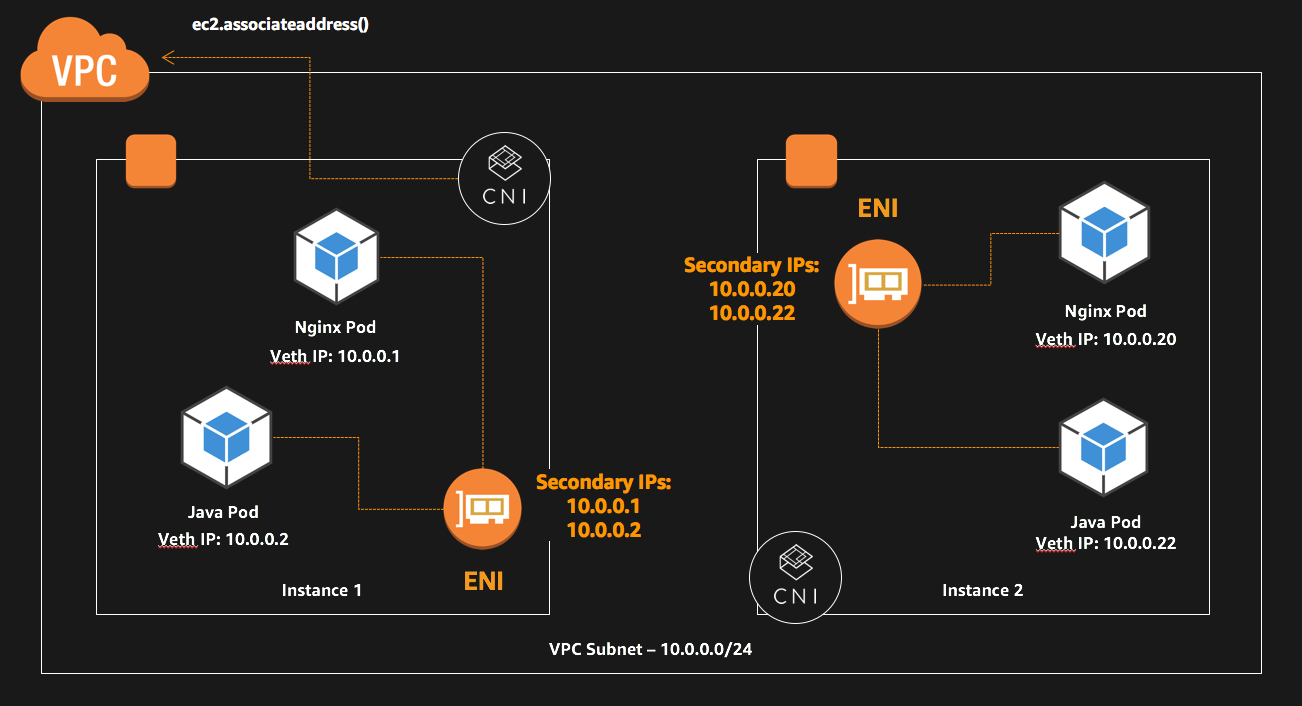

One area that EKS lacks scalability is the way it handles networking. In most Kubernetes setups, pods will live in a virtual network inside of your Kubernetes cluster that is only visible from within the cluster. With EKS, pods share the same network as the VPC that the cluster was created in. This means that each pod takes up a private IP address in the subnets you used to create the cluster, and each worker node has to attach multiple network interfaces to manage these IPs.

Source: AWS Documentation

By using the VPC network directly instead of a virtualized network, you can run into several issues. For one, anything in the VPC will be in the same network as your pods, so you must rely on VPC security groups if you wish to restrict access to your pods. Other solutions include running your cluster in a separate VPC or installing Calico to implement network segmentation. Another issue you may run into is the IP-address-per-ENI and ENI-per-instance limits in EC2. In my example setups above I used t2.large instances, which are limited to 3 ENIs and 12 IPs per ENI, for a total of 36 IP addresses. While it is unlikely I would actually try to run 36 pods on a t2.large, if your workload consists of many small containers you may run into this issue. Lastly, you will also be limited by the number of IP addresses in the subnets you used for the EKS cluster. For example, if your cluster was created using /24 subnets in 3 zones, you would be limited to around 750 total IPs in your cluster (depending on what else is in your VPC), creating a possible bottleneck that will be hard to fix if you suddenly run out of IP addresses while scaling up.
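The limits above are simple arithmetic, sketched here in shell. The function names are hypothetical. The `max_pods_per_node` formula is the one behind the per-instance pod limits published with the AWS VPC CNI plugin, as I understand it, so treat it as an assumption and check it against the current AWS figures.

```shell
# Simple product used in the text: ENIs per instance x IPv4 addresses per ENI.
#   $1 = ENIs per instance, $2 = IPs per ENI
ips_per_node() {
  echo $(( $1 * $2 ))
}

# Pod limit per node (assumed formula): the primary address of each ENI is
# not handed to pods, and 2 is added for pods that use host networking.
max_pods_per_node() {
  echo $(( $1 * ($2 - 1) + 2 ))
}

# Usable addresses in a subnet with prefix length $1.
# AWS reserves 5 addresses in every subnet.
usable_ips_in_subnet() {
  echo $(( (1 << (32 - $1)) - 5 ))
}

ips_per_node 3 12                            # t2.large: prints 36
max_pods_per_node 3 12                       # t2.large: prints 35
echo $(( 3 * $(usable_ips_in_subnet 24) ))   # three /24 subnets: prints 753
```

Running the same numbers for your intended instance type and subnet sizes before creating the cluster is a cheap way to find out whether IP exhaustion is a realistic risk.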

Networking considerations aside, it is actually quite easy to perform the most common maintenance tasks. You can add workers by increasing the size of your AutoScaling Group, replace workers using kubectl drain and then terminating the EC2 instance, and do most upgrades with little disruption to your cluster.
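A sketch of those two routine tasks, assuming the workers run in an AutoScaling Group and that you know both the Kubernetes node name and the EC2 instance ID of the worker being replaced. The helper names and example values are placeholders.

```shell
# Sketch: add workers by raising the desired capacity of the worker ASG.
#   $1 = AutoScaling Group name, $2 = new desired capacity
scale_workers() {
  aws autoscaling set-desired-capacity \
    --auto-scaling-group-name "$1" \
    --desired-capacity "$2"
}

# Sketch: replace a worker. Drain it so pods are rescheduled elsewhere,
# then terminate the instance and let the ASG launch a replacement.
#   $1 = Kubernetes node name, $2 = EC2 instance ID
replace_worker() {
  kubectl drain "$1" --ignore-daemonsets &&
    aws ec2 terminate-instances --instance-ids "$2"
}

# Example (placeholder values):
#   scale_workers k8s-workers 5
#   replace_worker ip-10-2-1-5.ec2.internal i-00000000000000000
```

The desired capacity has to stay within the group's minimum and maximum, so with the Terraform example earlier, where `asg_max_size` is 3, the maximum would need raising first.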

Managing a Kubernetes Cluster on Kops

Your experience managing a Kops cluster will largely depend on how and when you need to scale, and what other tools you use to manage AWS resources. I have been managing our production cluster at Blue Matador in Kops for almost 2 years, and have had to go through major Kubernetes upgrades, changing worker node types, and replacing dead worker nodes on several occasions.

What I’ve learned while using kops is that kops is really great at creating a cluster quickly, but only okay at managing it later. One pain point is that you must do a lot of groundwork to upgrade and replace master nodes for new versions of Kubernetes. When the vulnerability CVE-2018-1002105 was discovered in 2018, I found myself upgrading multiple major versions of Kubernetes in less than a day. I detailed the process in this blog post, and I found it much easier to just create a new cluster in a new VPC with kops than to upgrade the master and worker nodes one at a time for multiple versions of Kubernetes. I have made major changes to our production cluster multiple times using this method without any service disruption, and I would recommend it over using the kops edit command. I do have a bit of separate configuration in Terraform to manage our primary ELB and VPC peering connection to make these cluster replacements possible, but this method gives me more peace of mind, because a mistake or a feature that breaks on a new version of Kubernetes shows up in the new cluster rather than in production. I have also noticed in general that kops is a little further behind on Kubernetes versions than the EKS team, which will become more of a liability when the next major vulnerability is found.

For the mundane tasks of adding, replacing, and upgrading worker nodes, kops is very similar to EKS. AWS is doing most of the heavy lifting with AutoScaling Groups in both cases, and you can use the same process described in this blog post to maintain cluster stability while doing any changes to worker nodes.

Winner: EKS

Typical cluster maintenance is very similar with both EKS and kops. This makes sense given that most of the time you will be working with the worker nodes, and they are managed very similarly in both solutions. When it comes to upgrading the Kubernetes version, I found EKS to be significantly easier to work with than kops because AWS handles the tricky parts of the upgrade for you. The networking concerns with EKS should be kept in mind, especially when creating your cluster. Just make sure you are using instance types and subnet sizes that your cluster can easily grow into.

Kubernetes Security

Security should be a top concern for every Kubernetes administrator. As the Kubernetes ecosystem matures, more vulnerabilities will be found. The rate at which we find new security issues with Kubernetes is increasing, and this issue cannot be ignored. In my security evaluation of EKS and kops, I will focus on 3 areas:

- Who is responsible for security

- How easy is it to apply security patches

- The default level of security offered by each solution

Securing a Kubernetes Cluster with EKS

EKS benefits from the Amazon EKS Shared Responsibility Model which means you are not alone in making sure the control plane for your Kubernetes cluster is secure. This is ideal for Kubernetes because you will benefit from the security expertise of AWS on a platform that is still relatively new. You also get the benefit of AWS support for EKS if you are having issues with the control plane itself.

In the previous section I covered how easy it is to apply Kubernetes updates in EKS. This is another great point for EKS since security updates can be time-sensitive and you do not want to make mistakes. The Amazon EKS team is actively looking for security issues and will likely have the next patched version of Kubernetes ready very quickly when the next vulnerability is discovered.

By default, EKS clusters are set up with limited administrator access via IAM. Managing cluster permissions with IAM is more intuitive for many AWS users since they are using IAM for other AWS services as well. It is also relatively easy to set up EKS with encrypted root volumes and private networking. Since your AWS account doesn’t have root access to the master nodes for your cluster, you have an additional layer of protection. In other setups it would be easy to accidentally open up your master nodes to SSH access from the public internet, but with EKS it is not even possible.

Securing a Kubernetes Cluster with Kops

Since kops is limited in scope to only managing the infrastructure needed to run Kubernetes, the security of your cluster is almost entirely up to you. Kops clusters still benefit from the Amazon Shared Responsibility Model as it pertains to EC2 and other services, but without the benefit of extra security expertise or support with the Kubernetes control plane itself. On the other hand, the kops community is very active in the #kops-users Slack channel and you will probably get a decent response on most questions about kops there.

Updates with kops are not difficult, but they are also not simple. Upgrading major versions of Kubernetes involves a lot of manual steps to update the masters and the nodes, but AutoScaling still does most of the work. Kops does tend to lag on support for newer Kubernetes versions a little bit. This does not mean you cannot use newer versions, but the kops tool itself is not guaranteed to work with the newest versions.
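For reference, the in-place upgrade path with the kops CLI looks roughly like the sketch below. It assumes `KOPS_STATE_STORE` is already exported and that the installed kops release supports the target Kubernetes version; the helper name is hypothetical.

```shell
# Sketch: in-place Kubernetes upgrade of a kops-managed cluster.
# upgrade_kops_cluster is a hypothetical helper; $1 = cluster name.
upgrade_kops_cluster() {
  # 1. Bump the Kubernetes version in the stored cluster spec.
  # 2. Push the changed configuration out to the AWS resources.
  # 3. Replace masters and nodes one at a time so they pick up the change.
  kops upgrade cluster "$1" --yes &&
    kops update cluster "$1" --yes &&
    kops rolling-update cluster "$1" --yes
}

# Example (placeholder value):
#   upgrade_kops_cluster k8s.example.com
```

Running each command without `--yes` first prints what would change, which is a reasonable precaution on a production cluster.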

Kubernetes clusters created with kops are by default set up very much like EKS. Private networking, encrypted root volumes, and security group controls are included in most basic kops clusters. Since you have control over the master nodes, you can also further increase security there using any tools at your disposal. You can also set up IAM authentication similar to EKS, but the default authentication method for a cluster admin includes a user with a password and certificates.

Winner: EKS

The shared responsibility model for EKS is extremely valuable from a security perspective. Being able to lean on the security expertise of the EKS team and AWS support is hard to match with kops. Also, following most guides to set up EKS will result in a cluster that is secure and reliable, whereas with kops it will be easier to make critical mistakes on the master nodes that can expose your cluster. As far as security updates go, I feel more confident that EKS will have updates more quickly, and that I can apply them more easily, than I do with kops.

Both solutions ignore an important security aspect of Kubernetes: controlling pod access to your AWS resources. You can read more about this issue in my blog series where I compare two popular solutions to pod IAM access: kube2iam and kiam. One thing to be aware of is that kiam will not work with EKS without significant changes in configuration, since it relies on master nodes to run part of the IAM authentication pods. Kube2iam however will work in any Kubernetes cluster.

Overall winner: EKS

When I started investigating EKS for this post, I was convinced that these solutions were so similar that I could not declare a winner. I was put off by the complexity of the EKS “Getting Started” guides and the amount of configuration required to get my first cluster running compared with how easy it is in kops. However, after digging deeper into the purpose being served by EKS, I can confidently declare it the winner for running Kubernetes on AWS.

At first, the very narrow scope of EKS was alarming. I was upset that I could not just scale up worker nodes with the EKS UI easily. I expected more. As I dove in and used the service more, I grew to appreciate what they are doing. Fully managing the control plane gives me immense peace of mind when it comes to upgrade strategy and security of the Kubernetes cluster. At the same time, by leaving worker node management to the user, EKS is giving us a lot of flexibility for managing the most important part of a Kubernetes cluster: our pods on the worker nodes.

This is not to say kops should not be used. I find kops to be great at creating Kubernetes clusters quickly and cheaply, and the kops community is amazing. Kops also makes it possible to use bleeding-edge builds of Kubernetes if you desire it. There are also guides for using their tool on GCP and DigitalOcean, while EKS is obviously only usable in AWS. Kudos to the kops team for helping Kubernetes on AWS expand to the point that EKS even exists.

If you are looking for a monitoring solution for Kubernetes on AWS, whether it be a kops-managed cluster or an EKS cluster, consider Blue Matador. Blue Matador automatically checks for over 25 Kubernetes events out-of-the-box. We also monitor over 20 AWS services in conjunction with Kubernetes, providing full coverage for your entire production environment with no alert configuration or tuning required.